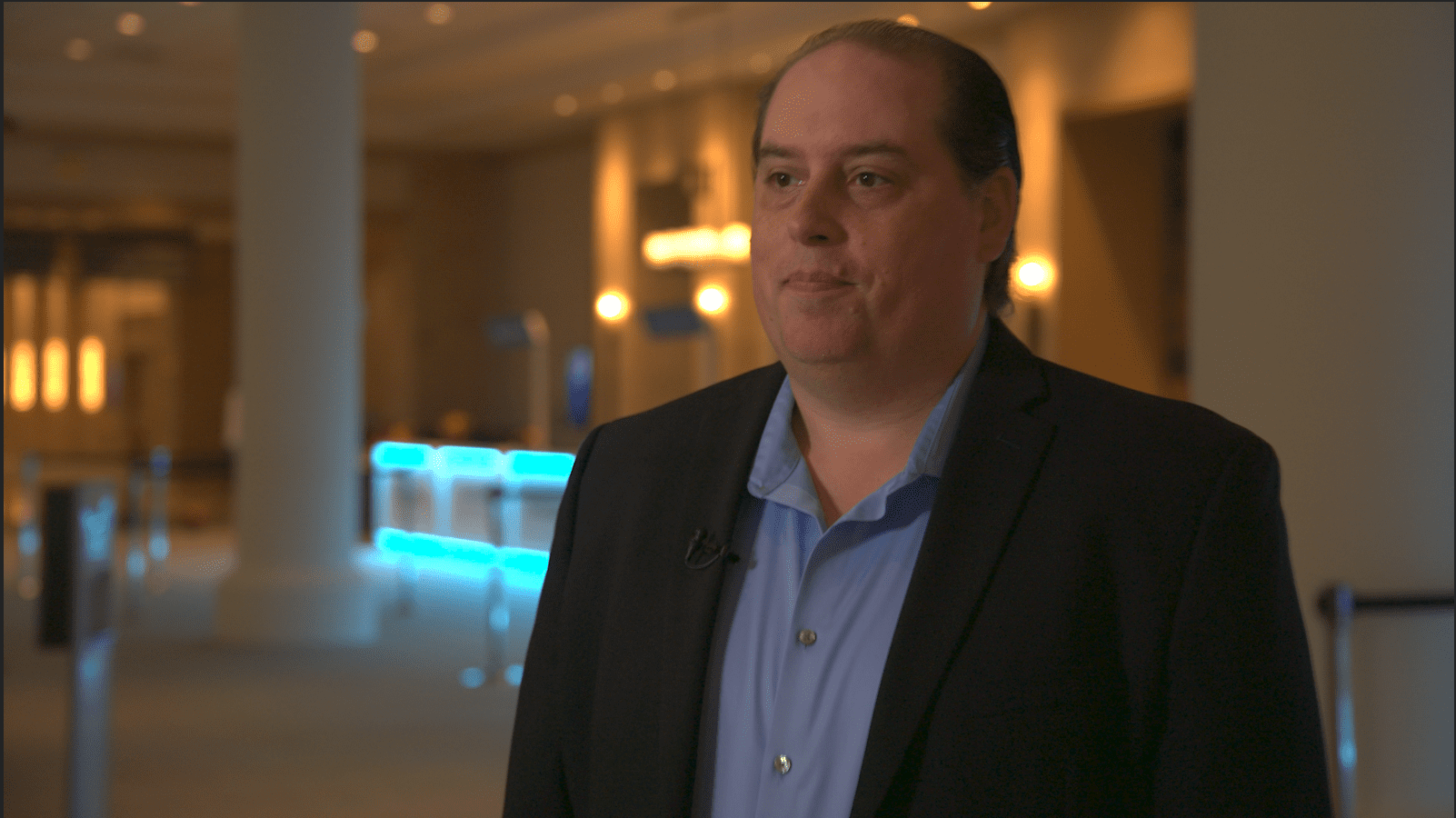

This blog is part three of a three-part blog series on “Why Public Cloud Over Private Cloud.” Read part one here and part two here.

In the previous post, we focused on legacy on-premises private cloud services supporting compute, network, storage and security. Now, let’s look at the public cloud native services and concepts that have become popular, and the strengths they provide.

Are Containerization and Kubernetes the Solution?

Some companies believe containerization and orchestration systems such as Kubernetes solve many of the previously discussed challenges and limitations of private cloud, thereby providing a truer cloud experience on-premises. But even container platforms run within a limited pool of resources, often on top of virtual clusters, and cannot scale beyond the cluster size. Usually the clusters are semi-static themselves, and when a cluster does scale it must pull resources from a virtual pool, which is in turn limited by the size of the physical cluster. These limitations further encumber the ability to think outside the box and do things differently, in a cloud native way.

Cloud Native: Cattle vs. Pets

Cloud native applications are intended to be ephemeral and are therefore treated as cattle, not pets. The idea is that you do not log into them, ever. If an instance has an issue, you terminate it immediately. You troubleshoot problems using logs, metrics, and tracing to understand what was occurring and narrow down issues, reproduce those issues in a non-production environment, then redeploy. These concepts make for a more secure and stable environment.

Ephemeral systems are intended to scale dynamically, from zero or one minimal workload running to hundreds or even thousands across many data centers and locales. In the public cloud, you want to pay only for what you need, when you need it. You do not overprovision for spikes, but instead design a solution that can scale to keep up with the rate of consumption. If a data center failure occurs, the application can scale up in other data centers on demand to handle the load. Doing this in a private cloud environment would be very costly, because you would always have to maintain enough provisioned resources and clusters to handle any scaling demand in any data center. In the public cloud, by contrast, you behave as if resources are limitless. The Cloud Service Provider (CSP) ensures a large enough pool of resources is always on hand. As a customer, you do not need to worry about having those extra resources, nor pay for them when they are not being used, but when you need them to scale, fail over, or experiment, you have them.

Serverless offerings from CSPs build on this benefit, and now include serverless storage, serverless container runtimes, serverless databases, and more. All of these implementations are intended to grow and shrink on demand, and they do not require the customer to maintain a cluster or deal with the complexities of patching, maintaining, or scaling the underlying resources.
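As a rough illustration of scaling with the rate of consumption rather than overprovisioning for spikes, the sketch below sizes a fleet from current demand. The function name, the per-replica capacity figure, and the replica limits are assumptions made for this example. It is not any CSP's actual autoscaling API.

```python
import math


def desired_replicas(requests_per_sec: float,
                     capacity_per_replica: float,
                     min_replicas: int = 0,
                     max_replicas: int = 1000) -> int:
    """Return how many replicas are needed to serve the current demand.

    capacity_per_replica is a hypothetical figure: the requests per second
    one replica can comfortably handle.
    """
    # Round up so that partial demand still gets a whole replica.
    wanted = math.ceil(requests_per_sec / capacity_per_replica)
    # Clamp to the configured floor and ceiling.
    return max(min_replicas, min(max_replicas, wanted))


# Walk through a quiet period, a normal load, and a spike.
for load in (0, 120, 4000):
    print(load, "req/s ->", desired_replicas(load, capacity_per_replica=50))
```

With a floor of zero the fleet disappears entirely when there is no demand, which is the behavior that makes pay-for-what-you-use pricing possible.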

Blue/Green and Canary Deployments

During elevations in a traditional environment, existing systems are often upgraded in place and need backout plans in case an upgrade goes awry. This interruption to production systems is the cause of many production outages. In the public cloud, you should always deploy new systems from the ground up, ensuring no interruption to the existing running applications. You toggle to the new environment only after it is proven stable, and you terminate the previous environment only after the new one has run well for a period long enough to ensure all edge cases are tested. On-premises, the demand for additional resources during and after elevations across many applications can be cost prohibitive, which is why most organizations never adopted such an approach in the past. DevOps and CI/CD deployments have taken off in popularity, and a big reason is the freedom gained from a near limitless supply of public cloud resources.
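The toggle described above can be sketched in a few lines. This is a minimal model under stated assumptions: `Environment`, `Router`, and the `healthy` flag are hypothetical names invented for illustration, and a real deployment would flip a load balancer or DNS record rather than a Python attribute.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Environment:
    name: str
    version: str
    healthy: bool = False  # set by whatever stability checks you run


@dataclass
class Router:
    live: Environment
    previous: Optional[Environment] = None

    def cut_over(self, candidate: Environment) -> bool:
        """Send traffic to the candidate only if it is proven stable."""
        if not candidate.healthy:
            return False  # the existing environment keeps serving, untouched
        self.previous, self.live = self.live, candidate
        return True

    def roll_back(self) -> bool:
        """Return traffic to the previous environment, if it still exists."""
        if self.previous is None:
            return False
        self.live, self.previous = self.previous, self.live
        return True


router = Router(live=Environment("blue", "1.0", healthy=True))
green = Environment("green", "1.1")

router.cut_over(green)  # refused: green has not been proven stable yet
green.healthy = True
router.cut_over(green)  # traffic moves to green; blue is kept for rollback
```

Because the old environment is kept until the new one has proven itself, backing out is a second toggle rather than a restore procedure.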

Micro-Services, Containers, and the Enterprise Reality

As applications are redesigned to be cloud native, monoliths are being broken up into hundreds or even thousands of micro-services. These new services demand lighter-weight solutions than virtual machines to run them, hence the popularity of containers and Kubernetes. Developing a properly designed container platform has its own challenges, and as more companies strive to adopt these modern technologies, they often get caught up in the hype, much of which is driven by purists who envision everything running in a container. The reality is that enterprises have mixed workloads, including on-premises physical solutions, virtual solutions, SaaS solutions, cloud IaaS solutions, cloud VMs, managed services, unmanaged services, serverless, clustered, lambda/events, and even assorted flavors of container technologies (not all Kubernetes) across many providers. Faced with this complexity, customers sometimes conclude they need to move everything to Kubernetes (k8s) to eliminate the differences.

In reality, it’s better to limit k8s (or any selected container solution) to running the Java (or other preferred language) application code that contains the micro-services logic, and to blend it with all the other services the CSP offers, including databases, security/firewalls, virtual networks, load balancers, serverless solutions, streaming, queueing, and more. In other words, you aren’t moving your databases or firewalls to k8s. Instead, you’re using the cloud native services wherever you can and limiting k8s to your specific application logic.

Leveraging all a CSP has to offer reduces the complexity of a container workload, rather than overcomplicating it because you got caught up in the hype. Since the critical cloud native services run outside of any customer-managed container service, the DevOps teams only need to focus on their business code and the proper API calls to interface with the cloud services. These services are easier to adopt and deliver the most value in the shortest time. When companies try to develop their own service offerings running on their own container platform, it leads to more complexity, more challenges, larger teams and a greater likelihood of frustration and failure.

Event Driven and Serverless Workloads

Cloud native solutions make it easier to adopt event driven workloads, which execute tasks dynamically based on all kinds of actionable events such as time of day, file delivery, scaling operations (up or down), queueing, streaming, log scanning, metric monitoring, tracing timings, error monitoring, and a multitude of other events daisy-chained together. Supporting an ever-growing dynamic environment on-premises is extremely difficult, both because the foundational systems to support events do not exist, and because having unused resources pre-allocated and waiting is extremely expensive. In the public cloud, however, an event based ecosystem is fundamental to how clouds are designed, and they are built to support event driven solutions from the ground up.
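To make the idea of daisy-chained events concrete, here is a small in-process sketch. The `EventBus` class and the event names (`file.delivered`, `file.validated`) are hypothetical and stand in for whatever managed eventing, queueing, or function service a CSP provides.

```python
from collections import defaultdict


class EventBus:
    """A toy publish/subscribe bus: handlers run when their event fires."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Use .get so publishing an unknown event does not register it.
        for handler in self._handlers.get(event_type, []):
            handler(payload)


bus = EventBus()
log = []


def on_file_delivered(payload):
    # First link in the chain: validate, then emit the next event.
    log.append(f"validated {payload['name']}")
    bus.publish("file.validated", payload)


def on_file_validated(payload):
    # Second link: only runs because the first handler published an event.
    log.append(f"loaded {payload['name']}")


bus.subscribe("file.delivered", on_file_delivered)
bus.subscribe("file.validated", on_file_validated)

# Nothing runs until an event arrives, so nothing sits idle waiting.
bus.publish("file.delivered", {"name": "orders.csv"})
print(log)
```

Each handler knows only about the event it consumes and the event it emits, which is what lets a chain grow without any step being rewritten.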

Security, Micro-Segmentation

On-premises organizations tend to focus on perimeter security (getting in the front door) and network segmentation, placing different apps into different zones with varying levels of security to penetrate. However, since 2019 ransomware attacks have risen 150% and become 3x more expensive. The industry has learned that a properly configured public cloud can be significantly more secure than on-premises workloads, offering the ability to micro-segment every running application into its own environment. Being able to create unique IAM (Identity and Access Management) roles and policies, encryption keys for data at rest, encryption certs for data in transit, and dedicated firewalls that fence off every application and allow only known services and APIs in improves the security posture immensely.
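The core of micro-segmentation is default deny: a connection is refused unless it is explicitly listed. The sketch below shows that rule in its simplest form. The service names and ports are made up for illustration, and in practice the allow-list would live in the CSP's firewall or security group rules rather than in application code.

```python
# Hypothetical allow-list: (source service, destination service, port).
ALLOWED_FLOWS = {
    ("web-frontend", "orders-api", 443),
    ("orders-api", "orders-db", 5432),
}


def is_allowed(source: str, destination: str, port: int) -> bool:
    """Default deny: only flows listed explicitly are permitted."""
    return (source, destination, port) in ALLOWED_FLOWS


# The frontend may call the API, but it can never reach the database directly.
print(is_allowed("web-frontend", "orders-api", 443))
print(is_allowed("web-frontend", "orders-db", 5432))
```

An intruder who compromises one application gains only the flows that application was granted, instead of the run of an entire network zone.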

Databases and Improved Security

Data can be further protected by defining a bounded context for each service and just its data, moving that data out of an enterprise database into a separate database for each micro-service. This fences the data off from intruders and ensures it is encrypted with unique, application-specific encryption keys that prevent unauthorized access. When every micro-service owns its own data, the result is hundreds of separate databases, which would be daunting to run on-premises. However, by leveraging either fully managed SQL databases or NoSQL solutions, you can create thousands of brand-new databases with a simple API call, and fully protect all of them. With many of the serverless database offerings from CSPs, you pay only for the data and access, not for the infrastructure costs of virtual machines and clusters.

Big Data/Data Lakes, AI/ML, Analytics

Big Data is another significant area of change, providing many new data, storage, retrieval, and search systems, including RDBMS/SQL, NoSQL, Distributed, Columnar, JSON, Document, Graph, Warehousing, Caching, Flat-File, AI/ML, Analytics and more. It is not one size fits all, and most of the cloud native solutions use optimized file/storage solutions that provide extreme parallelism and scale, not only across many physical devices but across many separate data centers and locales.

Consider an on-premises Hadoop cluster running within a data center, with 20, 50 or even 100 nodes. You can stand up a large cluster, but does it run at or near 100% capacity 24×7? Often these large clusters are built to handle several concurrent workloads running during various business hours. It is rare for any one query to utilize the whole cluster, but to handle concurrency, you need to keep growing the cluster. In the public cloud you can spin up clusters on demand and provide each team with its own cluster when needed. The data can be shared without having to be copied and moved, and spreading it over enough drives and enough data centers ensures no contention during heavy scan operations. Teams can scale up their own clusters, sized from tens to hundreds of nodes, and with many teams doing so the aggregate is equivalent to having thousands of nodes running. But you only pay for them while queries and processing are running. Otherwise they are unallocated and therefore zero cost. The value to the business puts AI/ML and analytics at a new level, empowering new models and ways of running the business that were only a pipe dream a few years ago.
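A back-of-the-envelope comparison shows why paying only while processing runs matters. Every number below is a hypothetical assumption chosen for easy arithmetic (cluster sizes, hours, team count, and a flat price per node-hour). The comparison also ignores storage and the real pricing differences between on-premises hardware and cloud nodes.

```python
def node_hours(nodes: int, hours_per_day: int, days: int) -> int:
    """Total node-hours consumed by a cluster over a period."""
    return nodes * hours_per_day * days


RATE_PER_NODE_HOUR = 1.00  # hypothetical flat price, same for both cases

# One shared 100-node cluster that is powered on around the clock for a month.
always_on = node_hours(nodes=100, hours_per_day=24, days=30)

# Five teams, each running a 20-node cluster 6 hours a day on 22 working days.
teams = 5
on_demand = teams * node_hours(nodes=20, hours_per_day=6, days=22)

print("always-on node-hours:", always_on, "cost:", always_on * RATE_PER_NODE_HOUR)
print("on-demand node-hours:", on_demand, "cost:", on_demand * RATE_PER_NODE_HOUR)
```

Under these assumptions the on-demand teams have the same peak capacity available together (5 × 20 nodes) while consuming a fraction of the node-hours, because idle time is simply not billed.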

Building a data-lake on a distributed and open storage foundation allows data to be shared effortlessly across a wide range of tools and solutions. Instead of treating a landing area as a stopgap for transformation, the landing area for files becomes the start of a data-lake. Whether you are running Hadoop workloads with map-reduce, Spark workloads with SQL, or streaming solutions into a data warehouse, the data-lake is key to modern AI/ML and analytics processing. A dynamically scalable and elastic storage foundation, where you do not need to worry about running out of space, can copy data on the fly to experiment, can lock data down with proper access policies, and can define proper life-cycle policies for the data, empowers companies in ways they have never had previously.

This concludes the blog series on “Why Public Cloud Over Private Cloud”. I hope you enjoyed this high-level discussion on the value of public cloud, and I welcome the opportunity to hear your comments and provide any additional clarity you would like.