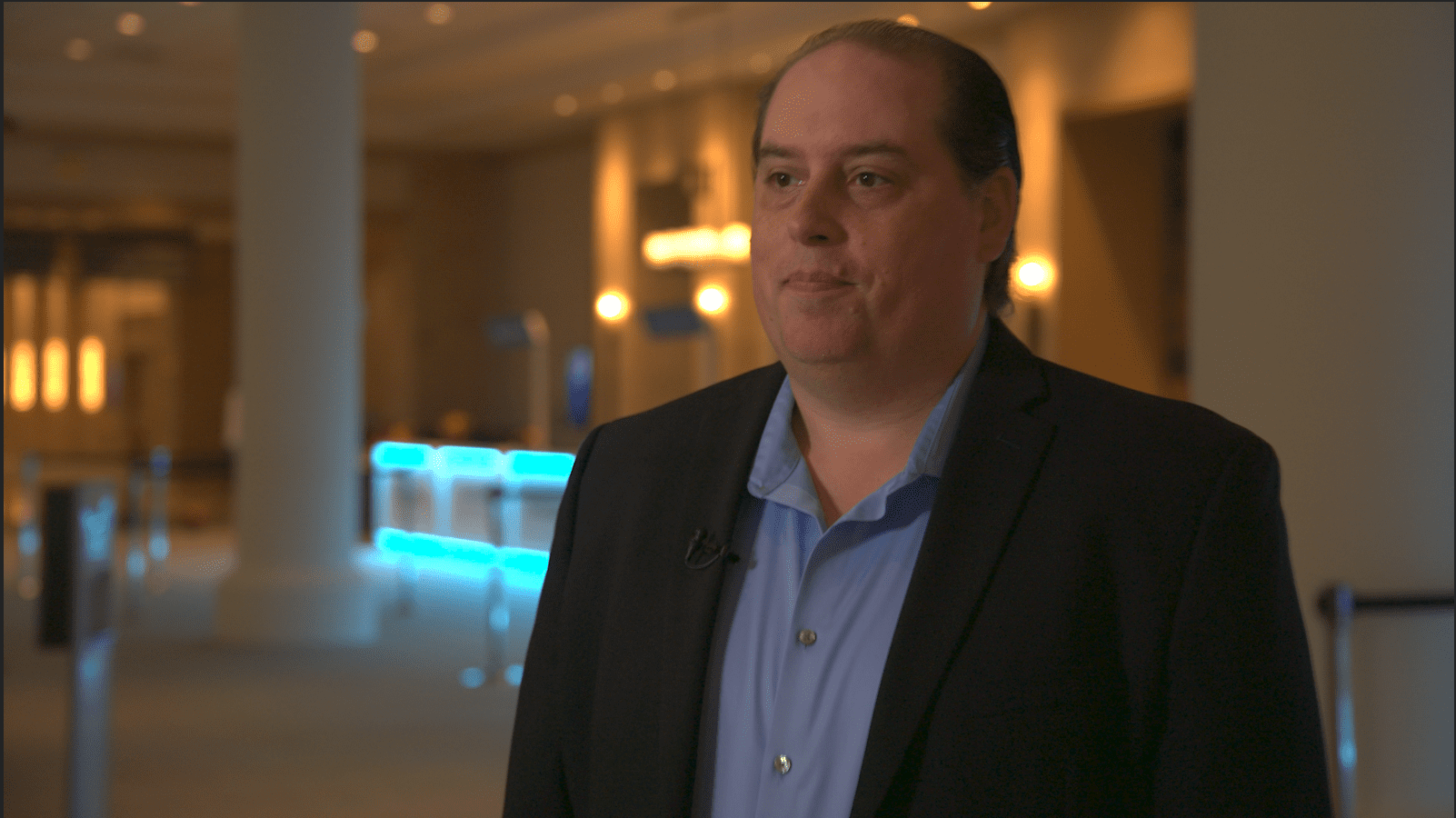

At Presidio, no technology solution is deemed “final” – instead we focus on a continuous cycle of refinement to extend cost-effective performance at every turn. That’s especially true in the rapidly shifting field of artificial intelligence (AI), where advancements are pushing the limits of today’s most robust hardware and software.

As part of that commitment to constant improvement, Presidio has teamed with its longtime technology ally Intel to test its emerging solutions in a variety of generative AI-focused use cases. Recently, we tested the performance of 4th Generation Intel® Xeon® Scalable processors compared to GPUs in machine learning for facial recognition and the results were promising.

Next, we wanted to evaluate the feasibility of running certain large language models (LLMs), in the range of 7 billion parameters, with private data in a retrieval-augmented generation (RAG) architecture on 4th Gen Intel Xeon Scalable CPUs, and to see how they compare in price/performance against GPUs.

In addition, we wanted to determine the impact of using Intel® Advanced Matrix Extensions (Intel® AMX), which is a built-in AI accelerator within Intel Xeon Scalable processors. Test results showed that Intel AMX delivered a clear performance boost.

Finding an efficient solution for inferencing on LLMs using private data

Enterprises often consider running LLMs on their private data in a RAG architecture rather than uploading that data to hosted, managed services on cloud platforms, in order to preserve confidentiality. Organizations are now recognizing the potential of applying GenAI to their private data in this way for their own benefit, such as running internal applications that increase productivity.

Inferencing on LLMs using private data in a cost-effective and efficient way allows for:

- Data privacy, keeping data safe and avoiding the need to send it to hosted GenAI managed services in the cloud. Customers can also implement this architecture in their own data centers or in a private cloud.

- Reduced cost, eliminating the need for expensive accelerated hardware.

- Positioning for the future as AI extends its growth and acceptance.

Traditionally LLM training and inference have been run on GPUs, owing to their robust parallel processing capabilities. However, inferencing LLMs on GPUs is expensive – and so the goal of our test was to determine whether the Intel Xeon Scalable processor with Intel AMX could prove to be a practical, performant and cost-effective alternative.

Why test Intel CPUs with Intel AMX and INT8 quantization for inferencing using LLMs

Presidio has long seen the potential of the 4th Gen Intel Xeon Scalable processor. Launched in early 2023, it is designed with 14 built-in acceleration features to offload specific demanding workloads, freeing up CPU compute cycles for other tasks.

One of these accelerators, Intel AMX, is designed to improve deep-learning training and inference performance by accelerating the matrix multiplication that AI models require. This feature makes it well suited to natural-language processing (NLP), recommendation systems, image recognition and other such workloads.
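Before comparing runs with and without Intel AMX, it can help to confirm that the instance actually exposes the feature. The following is a minimal sketch, assuming a Linux host where the kernel reports the `amx_tile`, `amx_int8` and `amx_bf16` flags in `/proc/cpuinfo`; the helper names are hypothetical and are not part of Presidio's test harness.

```python
from pathlib import Path

# Flag names the Linux kernel reports in /proc/cpuinfo for Intel AMX.
AMX_FLAGS = ("amx_tile", "amx_int8", "amx_bf16")


def amx_flags_present(cpuinfo_text: str) -> dict[str, bool]:
    """Return which AMX feature flags appear in the given cpuinfo text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a line such as "flags : fpu vme ... amx_tile".
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            flags.update(value.split())
    return {name: name in flags for name in AMX_FLAGS}


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read cpuinfo on Linux; return an empty string where the file is absent."""
    p = Path(path)
    return p.read_text() if p.exists() else ""


if __name__ == "__main__":
    print(amx_flags_present(read_cpuinfo()))
```

If all three flags are reported as present, the processor supports the INT8 and BF16 tile operations discussed in this article; whether a given framework actually uses them depends on its own configuration.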

Intel AMX accelerates matrix multiplication for the INT8 and BF16 data precision types. In addition, Presidio explored the benefit of using Intel AMX while quantizing the model weights to the INT8 precision type. For those unfamiliar, INT8 is an 8-bit integer data type that can be used for inferencing when single- or double-precision floating point is not needed for the usage model. This is particularly effective for language models and computer vision models where speed and efficiency are prioritized over precision.

The weight-only quantization of the model is achieved using Intel Extension for PyTorch with a few lines of code.
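To make the idea of weight-only INT8 quantization concrete, here is a small pure-Python sketch of the underlying arithmetic. It is an illustration of the concept only, not the Intel Extension for PyTorch implementation: the per-row symmetric scaling scheme and all function names are assumptions chosen for clarity.

```python
def quantize_row_int8(row: list[float]) -> tuple[list[int], float]:
    """Symmetric INT8 quantization of one weight row (one output channel)."""
    max_abs = max(abs(w) for w in row)
    # One float scale per row maps the largest magnitude to +/-127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in row]
    return quantized, scale


def dequantize_row(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from INT8 values and their scale."""
    return [v * scale for v in quantized]


def linear_weight_only_int8(x: list[float], q_rows: list[list[int]],
                            scales: list[float]) -> list[float]:
    """Compute y = W x with W stored as INT8 rows; activations stay in float."""
    return [
        scale * sum(qv * xv for qv, xv in zip(q_row, x))
        for q_row, scale in zip(q_rows, scales)
    ]


if __name__ == "__main__":
    weights = [[0.5, -1.0, 0.25], [0.02, 0.01, -0.04]]
    packed = [quantize_row_int8(row) for row in weights]
    q_rows = [q for q, _ in packed]
    scales = [s for _, s in packed]
    print(linear_weight_only_int8([1.0, 2.0, 3.0], q_rows, scales))
```

Each weight is stored in one byte instead of four (FP32), at the cost of a rounding error of at most half the row's scale per weight. That trade-off is the source of the smaller memory footprint described later in this article.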

Our process in testing CPUs versus GPU for LLMs

The model used is Intel/neural-chat-7b-v3-3 from Hugging Face. Using an AI chatbot appropriate for our purpose, Presidio posed two types of questions:

- Generic – consisting of broad, general queries, such as summarizing text, answering questions, sentiment analysis and other tasks that don’t require knowledge of private data.

- Retrieval-augmented generation (RAG) – highly specific questions that are answered by complementing the model's general knowledge with information retrieved from a customer's own database rather than the outside world; for example, using customer-specific private data for personalized question answering.
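The difference between the two question types can be sketched in a few lines: a RAG question first retrieves relevant private text and then places it in the prompt ahead of the question. The example below is a toy illustration, assuming a simple word-overlap score in place of the embedding-based vector search a production RAG system would typically use; the function names and prompt wording are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Toy relevance score: number of word occurrences shared by both texts."""
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents ranked by overlap with the query."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Assemble the final prompt: retrieved context first, then the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )
```

Because the retrieved context is prepended to the question, RAG prompts are much longer than generic ones, which is why the final input size matters when interpreting latency figures.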

Presidio then measured two industry-standard metrics:

- Tokens per second – Similar to words-per-second, the average throughput of the response.

- Time to first token – The time taken to generate the first token, measuring efficiency and response latency. It should be noted that next token latency is significantly smaller than the first token latency, which includes initialization overhead.
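Both metrics can be derived from timestamps taken while a response streams back. The following is a minimal sketch, assuming the model exposes its output as an iterable of tokens; `measure_stream` and its injectable `clock` parameter are hypothetical names and do not describe the tooling Presidio used.

```python
import time
from typing import Callable, Iterable


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict[str, float]:
    """Consume a token stream and report latency and throughput metrics."""
    start = clock()
    first_token_at = None
    count = 0
    for _ in tokens:
        now = clock()
        if first_token_at is None:
            first_token_at = now  # arrival time of the very first token
        count += 1
    end = clock()
    if count == 0:
        raise ValueError("stream produced no tokens")

    total = end - start
    ttft = first_token_at - start
    # Average gap between tokens after the first one has arrived.
    next_latency = (total - ttft) / (count - 1) if count > 1 else 0.0
    return {
        "tokens": count,
        "time_to_first_token_s": ttft,
        "mean_next_token_latency_s": next_latency,
        "tokens_per_second": count / total if total > 0 else float("inf"),
    }
```

Passing a custom `clock` makes the function easy to check with fixed timestamps; in a real measurement the default `time.perf_counter` would be used and `tokens` would be the model's streaming output.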

Presidio ran the test using three separate configurations:

- GPU instances – Specifically the p3.2xlarge in AWS.

- CPU instances without using Intel AMX – 4th Gen Intel Xeon Scalable processor, which is the m7i.8xlarge EC2 instance, without activating Intel AMX acceleration.

- CPU instances using Intel AMX and INT8 Quantization – the 4th Gen Intel Xeon Scalable processor, which is the m7i.8xlarge EC2 instance, with Intel AMX activated and using INT8 quantization.

The test was conducted using complex prompts with larger input sizes of 600 to 1,000 tokens.

Test results and key findings

| Question type | Intel Xeon CPU with Neural Chat – No AMX | GPU Instance p3.2xlarge with Neural Chat | Intel Xeon CPU with Neural Chat – INT8 Quantized for AMX |
| --- | --- | --- | --- |
| Generic Question | Tokens per second – 5-6<br>Time to first token – ~750 ms | Tokens per second – 100<br>Time to first token – <50 ms | Tokens per second – 25-27<br>Time to first token – <200 ms |
| RAG Question [Final input token size – 600-1,000 tokens] | Tokens per second – 3-4<br>Time to first token – ~6 s | Tokens per second – 120<br>Time to first token – <500 ms | Tokens per second – 35-40<br>Time to first token – ~2-4 s |

- GPUs provided higher throughput and lower latencies – For use cases that require high-precision data types or sustained high throughput at low latency, GPUs are the clear choice. This matters for workloads such as financial markets, physics research and high-precision scientific simulations. In contrast, NLP use cases achieve acceptable throughput and latency when running LLMs on Intel Xeon Scalable processors with Intel AMX. The measured throughput is well beyond typical human reading speed, making it suitable for chat applications and productivity tools. This approach also demonstrates a strong price-performance benefit.

- The Intel CPUs tested produced a modest first-token latency of 2 seconds or less – For the NLP use case we tested, the flexible and readily available 4th Gen Intel Xeon Scalable processor offers a cost-effective option.

- Intel AMX and INT8 quantization boost performance – Testing showed a notable performance boost for both generic and RAG prompts when using this option. Quantization brings an infrastructure bonus: it can run LLM inference in a smaller hardware footprint, conserving the memory and compute resources needed to operate.

Overall, the choice comes down to your requirements for delivering an AI-powered experience to end users. Depending on the use case and its requirements for speed, accuracy and cost, a price/performance analysis is worthwhile when selecting the optimum hardware for LLM inferencing. The same holds when comparing continuous inferencing as a dedicated AI workload against sparse inferencing on infrastructure shared with other general-purpose workloads.
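One way to frame such a price/performance analysis is to convert an instance's hourly price and measured throughput into a cost per million generated tokens. The sketch below assumes a simple model in which the instance is billed for the whole hour and is busy generating tokens for a fraction `utilization` of it; the function names and this cost model are illustrative assumptions, and no instance prices are implied.

```python
def cost_per_million_tokens(price_per_hour: float, tokens_per_second: float,
                            utilization: float = 1.0) -> float:
    """Cost of generating one million tokens on a single instance.

    utilization is the fraction of each billed hour spent generating tokens:
    1.0 models continuous inferencing, smaller values model sparse usage.
    """
    if price_per_hour < 0 or tokens_per_second <= 0 or not 0 < utilization <= 1:
        raise ValueError("invalid price, throughput or utilization")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return price_per_hour / tokens_per_hour * 1_000_000


def break_even_price_ratio(cpu_tokens_per_second: float,
                           gpu_tokens_per_second: float) -> float:
    """CPU/GPU hourly price ratio below which the CPU is cheaper per token,
    assuming both instances run at the same utilization."""
    if cpu_tokens_per_second <= 0 or gpu_tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    return cpu_tokens_per_second / gpu_tokens_per_second


if __name__ == "__main__":
    # RAG throughput range from the results table: CPU with AMX 35-40, GPU 120.
    print(break_even_price_ratio(35, 120), break_even_price_ratio(40, 120))
```

Using the RAG throughput figures from the table, the CPU instance is cheaper per token under this model when its hourly price is below roughly 29% to 33% of the GPU instance's hourly price. The model does not credit a CPU instance for serving other general-purpose workloads while it is not inferencing, so it understates the CPU case for sparse usage; substitute current prices for both instances before drawing any conclusion.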

Presidio envisions the potential of Intel CPUs with Intel AMX for LLMs

Given the test results, Presidio envisions many use case scenarios where the Intel CPU with Intel AMX may be a better option for running LLMs. For instance:

- Virtual Data Analyst – Imagine a salesperson wanting specific information about their top three customers worldwide. Rather than imposing on a data scientist to spend hours researching the topic, the salesperson can query the LLM and access the data in seconds, saving everyone valuable time.

- Internal chatbot – Now employees can retrieve, organize and make better use of company-specific information quickly and in unique ways, all while keeping sensitive or privileged data secure. Separate chat experiences can be spun up for customers, industry peers, general audiences and more.

These examples are just the beginning. For companies looking to future-proof their IT capabilities for the age of AI, 4th Gen Intel Xeon Scalable processors are fully capable of inferencing on LLMs privately, without a costly infrastructure investment in GPUs. What’s more, those latest processors are equipped with Intel AMX acceleration built in.

Looking ahead, the Intel® Gaudi® 2 AI accelerator is already driving improved deep learning price-performance and operational efficiency for training and running state-of-the-art models, from the largest LLMs to basic computer vision and NLP models. In keeping with our continuous cycle of refinement and innovation, Presidio plans to evaluate Intel Gaudi 2 to determine how it can accelerate our customers’ Gen AI transformation journey.

Consider the advantages you can achieve by inferencing on LLMs privately on CPUs. Start the conversation by bringing us your use case; we’ll help you envision a viable plan for LLM performance and a richer engagement for everyone.