Introduction

This blog post gives an overview of automation in business intelligence. It describes the advantages of, and the setup for, applying DevOps concepts such as source control and continuous deployment to deploy Tableau entities from Bitbucket repositories to Tableau Server/Online.

Benefits of Business Intelligence (BI) Automation

In this section, we will investigate some of the crucial business processes involved in BI automation.

- Insights discovery automation: It prioritizes and ranks insights by significance, which helps stakeholders make decisions.

- Analyzing data from disparate systems: It streamlines data flow from one system to another and consolidates data into a single source of truth.

- Democratization of BI: Automated reports can run on a regular, predictable schedule, such as every Monday. Certain events, such as a logistics backlog that has grown to a critical level and requires correction, can also trigger them.

Typical Scenario for BI Automation

Consider the following entities that need to be deployed across multiple Tableau sites:

- Tableau workbook

- Published data source—connected to MySQL

For instance, suppose we have three Tableau Online sites, one for each environment stage: Dev, QA, and UAT. We will build a custom workflow that deploys these entities to each site.

Source Control

Source control is the process of tracking revisions to code during development.

- It records when each revision took place, who made it, and what changed.

- It keeps a running history of all versions, enables rollback to any previous code version if necessary, and enforces documentation at each stage of development.

- It removes the developer’s responsibility for storing and managing several iterations of code.

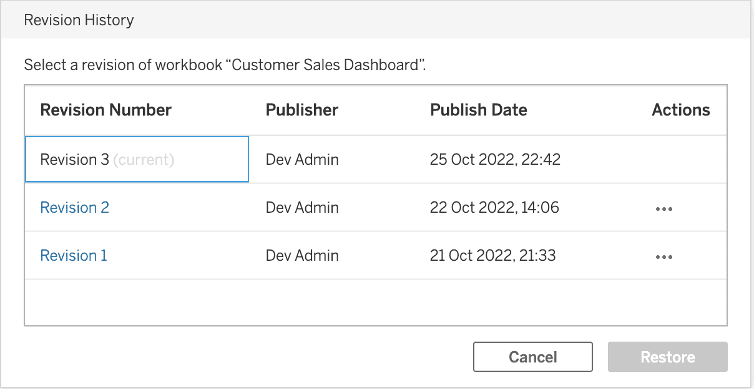

Source Control in Tableau

Tableau Online and Tableau Server have the Revision History feature enabled by default. Tableau Server stores up to 25 revisions of each workbook and data source, while Tableau Online stores a maximum of 10. Each revision records the publisher’s name and the publish date.

Limitations of Source Control in Tableau

- It does not support adding a title or description to revisions. Without this information, the developer has no insight into the changes made to the workbook on each revision. The developer must rely on memory or a manually written log to document the workbook’s state at each revision.

- If a workbook/data source is deleted from Tableau Server/Cloud, the revision history is also deleted and non-retrievable, even if the deleted workbook is republished. The only way a deleted workbook can be retrieved is to restore the entire Tableau server from a backup taken when the workbook still existed. (Note: This option is not available for Tableau Cloud.)

- The limit on the number of revisions (10 or 25) may be too low for some projects. In some organizations, Tableau Server administrators may set the limit below the maximum threshold to preserve storage space.

Extending Source Control for Tableau with Git:

The benefits of tracking the lineage of Tableau objects, such as workbooks and data sources, in Git are:

- Each revision carries a title, date, and description, so developers can easily audit and understand the state of the workbook at each stage.

- You can store an unlimited number of revisions and revert to any prior revision. This could be beneficial for large projects with many feature updates and revisions.

- Revisions are protected if the workbook is deleted from the Tableau production environment. Because the Git repository is separate from the Tableau platform, a workbook deleted from Tableau Server/Online persists in the Git repository, along with its revisions, until it is deleted there.

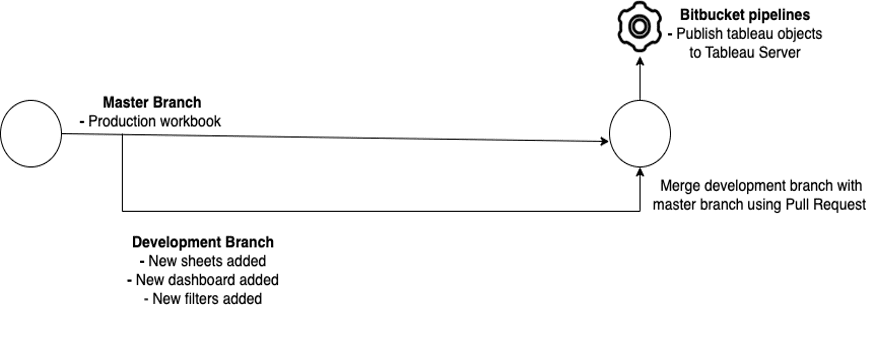

Continuous Deployment

In software development, continuous deployment is a release strategy whereby production-ready code is subjected to automated testing and, upon passing, is automatically released to the production environment.

- In Tableau, the underlying XML code of workbooks or data sources is generated by the Tableau application, so the automated testing phase of continuous deployment is less applicable.

- More relevantly, continuous deployment closes the gap between the production-ready code (e.g., the latest revision of our Tableau workbook in Bitbucket) and the production environment (Tableau Server/Cloud) by deploying automatically from Bitbucket (source control) to Tableau Server/Cloud.

Tableau Server REST API

Tableau Server’s REST API lets you manage Tableau resources programmatically over HTTP. Requests can be authenticated with personal access tokens. The Python module tableauserverclient wraps this API.
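As a rough illustration of the module in use, the sketch below signs in with a personal access token and publishes a workbook, overwriting any existing workbook of the same name. The function names, the parameters, and the choice to look up the target project by name are assumptions made for illustration; they are not taken from the deployment logic described in this post. It requires `pip install tableauserverclient`.

```python
from pathlib import Path


def workbook_name_from_path(workbook_path):
    """Use the file name without its extension as the workbook name."""
    return Path(workbook_path).stem


def publish_workbook(server_url, site_id, token_name, token_secret,
                     project_name, workbook_path):
    """Publish a .twb/.twbx file and return the ID of the published workbook."""
    # Imported here so this module loads even when the dependency is absent.
    import tableauserverclient as TSC

    auth = TSC.PersonalAccessTokenAuth(token_name, token_secret, site_id=site_id)
    server = TSC.Server(server_url, use_server_version=True)

    # The context manager signs out when the block exits.
    with server.auth.sign_in(auth):
        # Find the target project by name (hypothetical lookup strategy).
        project = next(
            (p for p in TSC.Pager(server.projects) if p.name == project_name),
            None,
        )
        if project is None:
            raise ValueError(f"Project not found: {project_name}")

        item = TSC.WorkbookItem(
            project_id=project.id,
            name=workbook_name_from_path(workbook_path),
        )
        published = server.workbooks.publish(
            item, str(workbook_path), mode=TSC.Server.PublishMode.Overwrite
        )
        return published.id
```

Data sources can be published in a similar way through `server.datasources.publish` with a `TSC.DatasourceItem`.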

Bitbucket Cloud REST API

The Bitbucket Cloud REST API allows programmatic interaction with Bitbucket. The Python module atlassian-python-api wraps this API.
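To give a feel for the API, here is a minimal sketch that lists the files added or modified in a pull request by reading its diffstat resource. To stay dependency-free it calls the REST endpoint directly with `urllib` rather than going through atlassian-python-api. The function names are hypothetical, and authentication with a username and app password over HTTP Basic auth is an assumption about how the credentials are set up.

```python
import base64
import json
import urllib.request

API_ROOT = "https://api.bitbucket.org/2.0"


def diffstat_url(workspace, repo_slug, pr_id):
    """URL of the diffstat resource for one pull request."""
    return (
        f"{API_ROOT}/repositories/{workspace}/{repo_slug}"
        f"/pullrequests/{pr_id}/diffstat"
    )


def fetch_page(url, username, app_password):
    """GET one page of JSON using HTTP Basic auth with an app password."""
    credentials = base64.b64encode(f"{username}:{app_password}".encode()).decode()
    request = urllib.request.Request(
        url, headers={"Authorization": f"Basic {credentials}"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def changed_paths(first_url, get_page):
    """Collect the paths of files added or modified in the pull request.

    `get_page` is any callable mapping a URL to a decoded JSON page, which
    keeps this function usable without network access (for example in tests).
    """
    paths = []
    url = first_url
    while url:
        page = get_page(url)
        for entry in page.get("values", []):
            # Removed files have no "new" side, so they are skipped.
            if entry.get("status") in ("added", "modified") and entry.get("new"):
                paths.append(entry["new"]["path"])
        url = page.get("next")  # absent on the last page
    return paths
```

A call might look like `changed_paths(diffstat_url("my-workspace", "project-repo", 12), lambda url: fetch_page(url, user, app_password))`, where the workspace, repository slug, and pull request number are placeholders.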

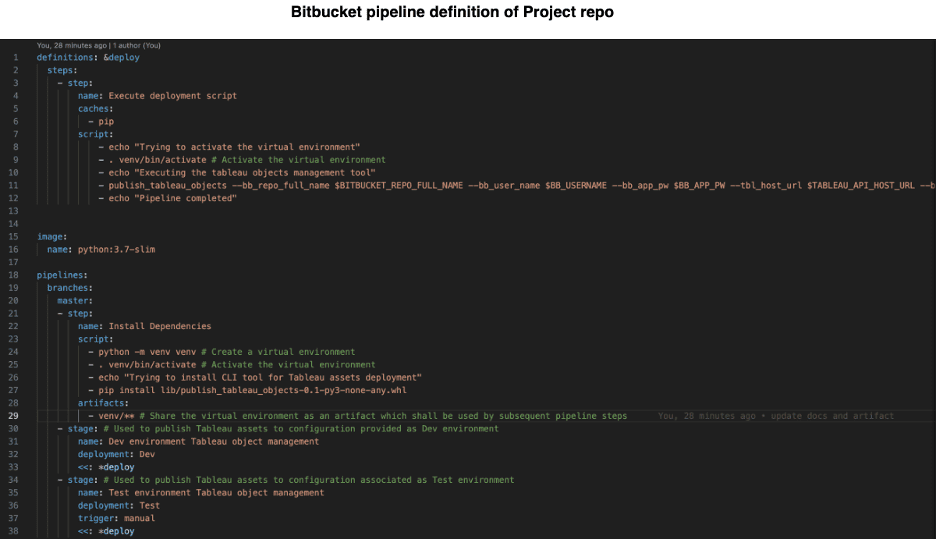

Bitbucket pipelines

Bitbucket Pipelines is a service built into Bitbucket that automatically builds, tests, and deploys code based on a configuration file (bitbucket-pipelines.yml). It spins up containers in the cloud, customized and configured for our needs according to that file.

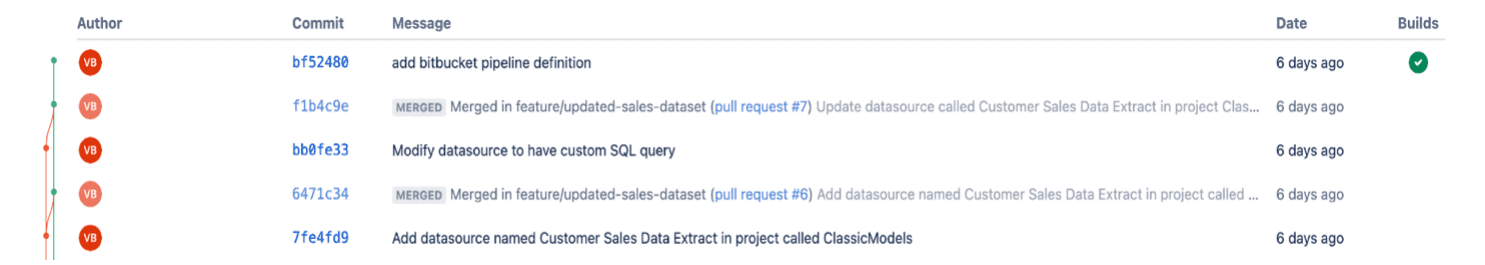

Continuous Deployment with Bitbucket Pipelines

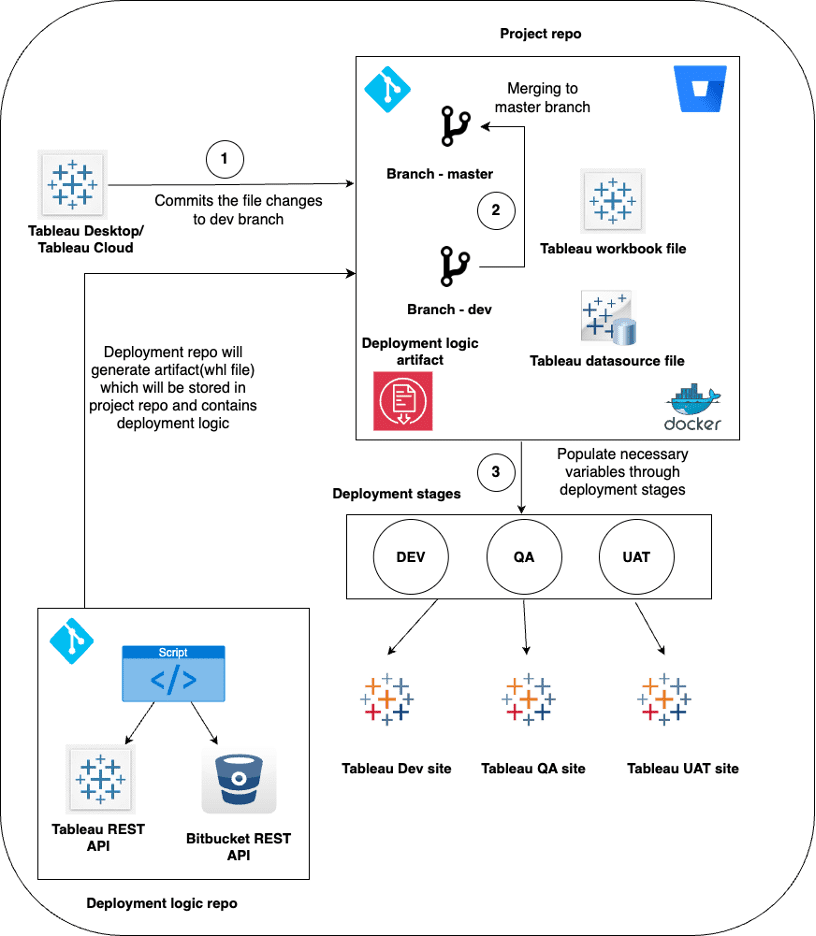

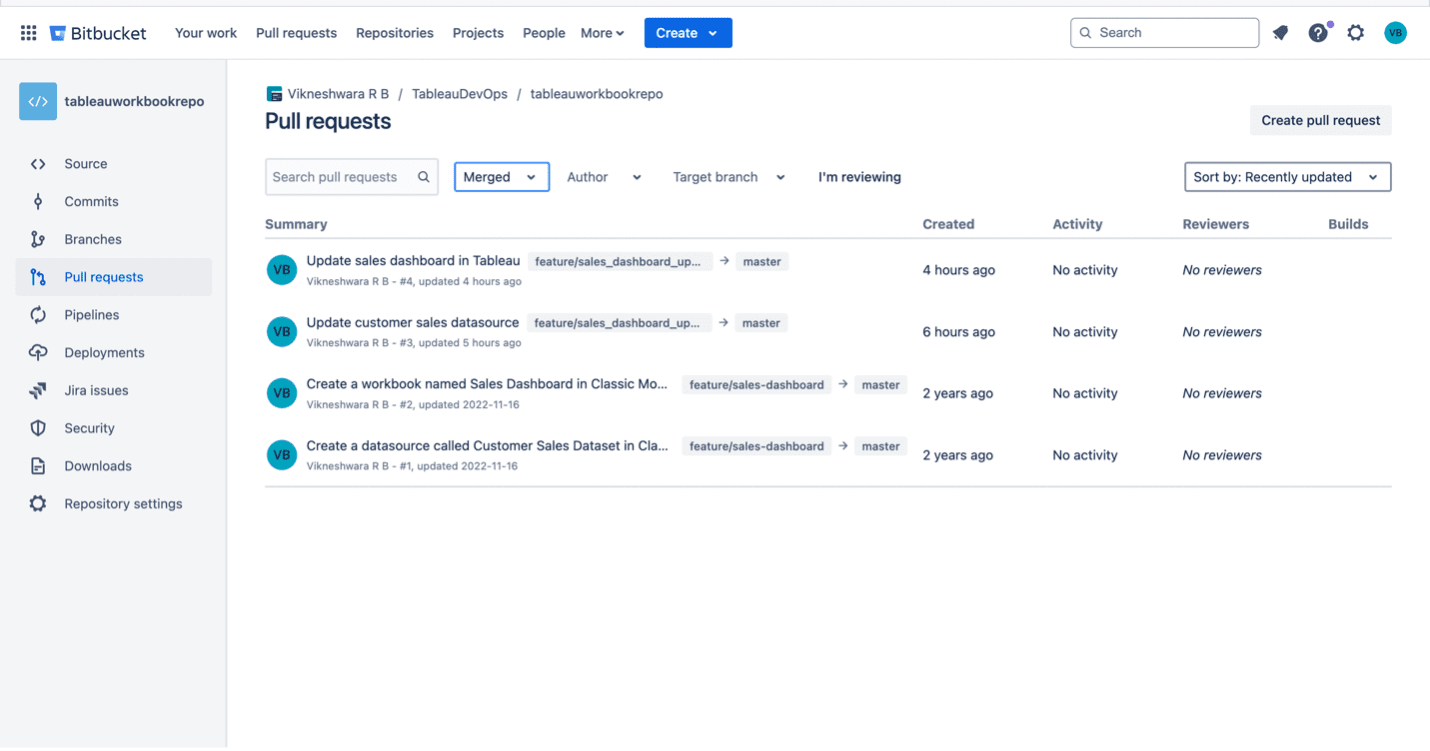

We will use two Bitbucket repositories:

- Project repo: Contains the workbook and data source files.

- Deployment logic repo: Contains the deployment logic, which reads pull request details from the project repo using the Bitbucket REST API and publishes objects to Tableau Server using the Tableau REST API.

The workflow proceeds as follows:

- We author Tableau entities, such as workbooks or data sources, in Tableau Desktop or Cloud.

- We commit these entities to a lower branch (e.g., the dev branch) in the project repo and create a pull request (PR) against a higher branch (e.g., the master branch).

- Merging the PR triggers a Bitbucket pipeline in the project repo.

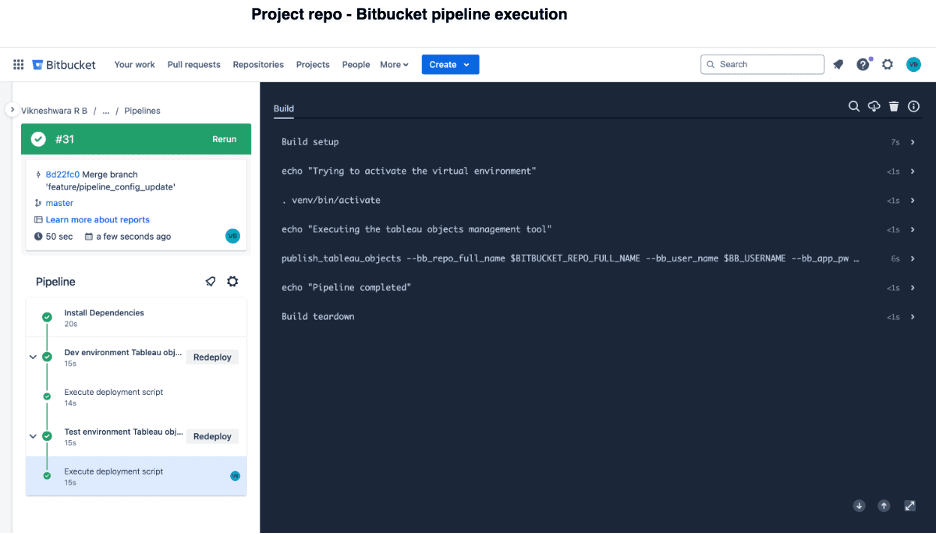

The system operates automatically for lower deployment stages (DEV) but prompts for manual confirmation for higher deployment stages (QA, UAT, etc.).

- Bitbucket assigns repository and deployment variables to each stage.

- We secure Tableau credentials using secured variables under deployment stages in Bitbucket.
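Inside a pipeline step, these variables are exposed to the running process as environment variables. The sketch below shows one way the deployment logic could collect them into a single target object. `BITBUCKET_DEPLOYMENT_ENVIRONMENT` is a variable that Bitbucket Pipelines sets for deployment steps; the `TABLEAU_*` names are hypothetical and would need to match whatever variables are defined for each deployment stage.

```python
import os
from dataclasses import dataclass, field

# Hypothetical variable names, defined per deployment stage in Bitbucket.
REQUIRED_VARIABLES = (
    "TABLEAU_SERVER_URL",
    "TABLEAU_SITE_ID",
    "TABLEAU_PAT_NAME",
    "TABLEAU_PAT_SECRET",
)


@dataclass(frozen=True)
class TableauTarget:
    stage: str
    server_url: str
    site_id: str
    token_name: str
    # Excluded from repr() so the secret does not leak into pipeline logs.
    token_secret: str = field(repr=False)


def load_target(env=os.environ):
    """Build the Tableau deployment target from pipeline variables."""
    missing = [name for name in REQUIRED_VARIABLES if not env.get(name)]
    if missing:
        raise KeyError(f"Missing pipeline variables: {', '.join(missing)}")
    return TableauTarget(
        # Falls back to "dev" when run outside a deployment step (assumption).
        stage=env.get("BITBUCKET_DEPLOYMENT_ENVIRONMENT", "dev").lower(),
        server_url=env["TABLEAU_SERVER_URL"],
        site_id=env["TABLEAU_SITE_ID"],
        token_name=env["TABLEAU_PAT_NAME"],
        token_secret=env["TABLEAU_PAT_SECRET"],
    )
```

Passing the environment in as a parameter makes it possible to exercise the function locally with a plain dictionary instead of real pipeline variables.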

Project repo pipeline execution flow

- The project repo pipeline installs a CLI tool that contains the deployment logic.

- The deployment logic retrieves the PR details from the project repo and identifies any newly added or modified files.

- It modifies the Tableau object metadata files according to the deployment stage in Bitbucket.

- It publishes the resulting files to Tableau Server or Cloud, depending on the provided configuration.
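The metadata step in the flow above could look roughly like the following sketch, which repoints MySQL connections in a workbook or data source file at the database for a given stage before publishing. It assumes a simplified file layout in which connection details are attributes (`class`, `server`, `dbname`) on `connection` elements; the per-stage host and database names are invented placeholders, and the actual deployment logic may manipulate different fields.

```python
import xml.etree.ElementTree as ET

# Hypothetical per-stage MySQL settings; real values would come from
# deployment variables rather than being hard-coded.
STAGE_CONNECTIONS = {
    "dev": {"server": "mysql-dev.example.com", "dbname": "sales_dev"},
    "qa": {"server": "mysql-qa.example.com", "dbname": "sales_qa"},
    "uat": {"server": "mysql-uat.example.com", "dbname": "sales_uat"},
}


def retarget_connections(xml_text, stage, db_class="mysql"):
    """Return the XML with matching connections pointed at the given stage."""
    settings = STAGE_CONNECTIONS[stage]
    root = ET.fromstring(xml_text)
    # iter() walks the whole tree, so nested connections are found too.
    for connection in root.iter("connection"):
        if connection.get("class") == db_class:
            for attribute, value in settings.items():
                connection.set(attribute, value)
    return ET.tostring(root, encoding="unicode")
```

Note that this round trip through `ElementTree` drops XML comments and the XML declaration, so a production tool would need to confirm that Tableau still opens the rewritten file.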

The following code repositories implement the proposed workflow:

Benefits of proposed workflow:

- Reduces the possibility of manual errors.

- Makes it easy to apply configuration changes in bulk.

- Maintains a record of deployment history (version control included).

- Preserves documentation of each deployment, so audits and troubleshooting are quick.

- Integrates quality checks and watermarking into the ongoing process.

- Keeps the deployment logic in a separate repository for customization, reusability, and complete workflow control.

- Extends publishing of Tableau objects to any number of Bitbucket repositories by including the pipeline definition in every project repo.

Limitations of proposed workflow:

- Since packaged workbook (.twbx) and data source extract (.tdsx) files are binary, source control is less useful for them.

- Manipulation of Tableau files has been tested only with items authored in Tableau Desktop 2022.2; items from other versions may not be compatible.

- The deployment logic is implemented in Python, so modifying it for complex requirements can be time-consuming.

Conclusion

The proposed workflow for deploying Tableau objects using Bitbucket pipelines offers an automated solution that reduces manual errors, enhances version control, and streamlines the deployment process across multiple environments. By integrating source control with continuous deployment, businesses can achieve greater operational efficiency, allowing for easier scalability and customisation. However, limitations such as challenges with binary files, version-specific compatibility, and the complexity of deployment logic adjustments highlight the need for careful planning and adaptation in more intricate use cases. Despite these challenges, this approach provides a solid foundation for automating business intelligence deployments in a DevOps framework.
