Lambda has a size limit of 6MB on request and response payloads for synchronous invocations. This caps how much data a client can send to, or receive from, a Lambda-backed API endpoint in a single request.

Lambda Quotas: https://docs.aws.amazon.com/lambda/latest/dg/gettingstarted-limits.html

Traditional request-response models require the response to be fully generated and buffered before it is sent back to the client. This increases the time to first byte (TTFB), because the client waits for the entire response. Web applications are especially sensitive to TTFB and page load performance.

To address these limitations, AWS Lambda supports streaming the response payload. Response streaming lets functions send response payloads back to clients progressively, as the data becomes available, instead of all at once.

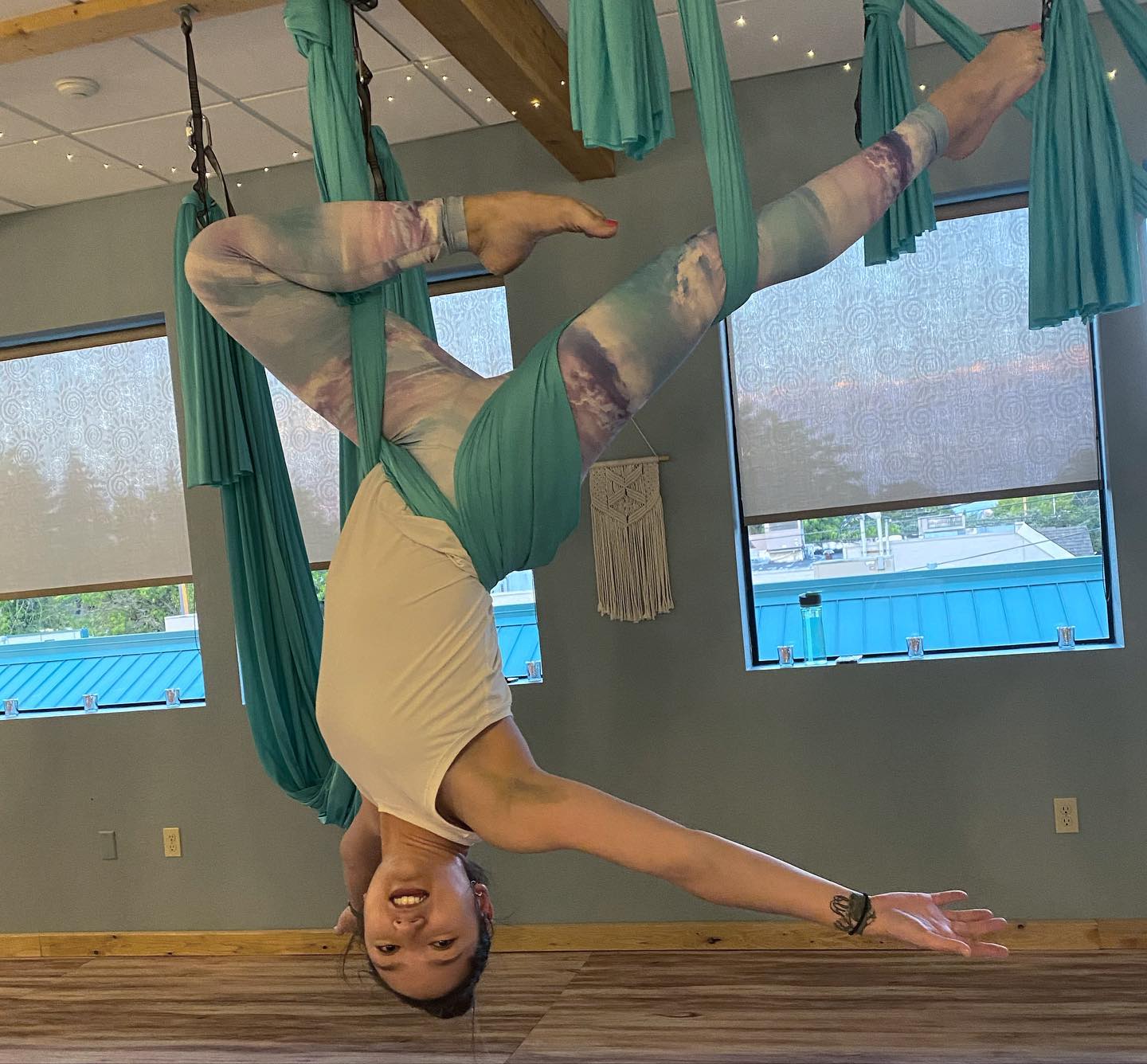

How do I configure the Lambda function to return a streaming response?

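To enable streaming, wrap your handler function in the `streamifyResponse` decorator. It is exposed on the `awslambda` object, a global that the Lambda Node.js runtime provides, so no import is needed. If clients call the function through a function URL, also set the URL's invoke mode to `RESPONSE_STREAM`; the default mode, `BUFFERED`, returns the response only after it is complete.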

The following example returns a simple streaming response.

```javascript
exports.handler = awslambda.streamifyResponse(
  async (event, responseStream, context) => {
    // Set the content type before writing any data
    responseStream.setContentType("text/plain");
    responseStream.write("Hello, world!");
    // Signal that no more data will be written
    responseStream.end();
  }
);
```

Check the function signature above: `async (event, responseStream, context)`

- The `event` object contains the invocation event, as in a standard handler.
- `responseStream` is a writable stream object. Any bytes you write to `responseStream` are streamed to the client.
- The `context` object is unchanged from standard handlers.
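The `awslambda` global exists only inside the Lambda Node.js runtime, so the handler above cannot be called directly from plain Node.js. The following is a minimal sketch for local experimentation only. It assumes a hypothetical stub `awslambda` object whose `streamifyResponse` simply returns the handler, and a hypothetical fake response stream (a `PassThrough` with a `setContentType()` stub). Neither reproduces the real runtime's behavior; they only let you check what the handler writes.

```javascript
"use strict";
const { PassThrough } = require("stream");

// Hypothetical local stub for the runtime-provided `awslambda` global.
// It returns the handler unchanged so it can be called directly.
const awslambda = {
  streamifyResponse: (handlerFn) => handlerFn,
};

// Same handler as above.
const handler = awslambda.streamifyResponse(async (event, responseStream, context) => {
  responseStream.setContentType("text/plain");
  responseStream.write("Hello, world!");
  responseStream.end();
});

// Hypothetical fake response stream: a PassThrough that records the content type.
function createFakeResponseStream() {
  const fake = new PassThrough();
  fake.contentType = undefined;
  fake.setContentType = (type) => {
    fake.contentType = type;
  };
  return fake;
}

// Calls the handler with the fake stream and collects everything it writes.
async function invokeLocally(handlerFn, event = {}) {
  const responseStream = createFakeResponseStream();
  const chunks = [];
  responseStream.on("data", (chunk) => chunks.push(chunk));
  const ended = new Promise((resolve, reject) => {
    responseStream.on("end", resolve);
    responseStream.on("error", reject);
  });

  await handlerFn(event, responseStream, {});
  await ended;

  return {
    contentType: responseStream.contentType,
    body: Buffer.concat(chunks).toString("utf8"),
  };
}

invokeLocally(handler).then((result) => {
  console.log(result.contentType, result.body); // text/plain Hello, world!
});
```

Keeping the fake stream separate from the handler means the same handler code can be deployed unchanged; only the stub is local.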

Write to the response stream

The `responseStream` object implements Node’s Writable Stream API, so you can send data with its `write()` method. However, AWS recommends using `pipeline()` wherever possible. `pipeline()` handles backpressure, so a fast readable source cannot overwhelm the slower writable stream, which can improve performance.
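A minimal sketch of the `pipeline()` approach, assuming a hypothetical `generateCsvRows()` source that produces rows lazily. Any `Writable` can stand in for `responseStream` when experimenting locally; inside a streaming handler you would pass the real `responseStream`, as the comment at the end of the block suggests. It uses `util.promisify(stream.pipeline)` so it also runs on Node.js 14.x.

```javascript
"use strict";
const { Readable, Writable, pipeline } = require("stream");
const { promisify } = require("util");

const pipelineAsync = promisify(pipeline);

// Hypothetical data source: yields CSV rows one at a time instead of
// building the whole response in memory.
async function* generateCsvRows(count) {
  yield "id,square\n";
  for (let i = 1; i <= count; i++) {
    yield `${i},${i * i}\n`;
  }
}

// pipeline() pauses the source whenever the destination's buffer is full,
// and ends the destination once the source is exhausted.
async function streamRows(responseStream, count) {
  await pipelineAsync(Readable.from(generateCsvRows(count)), responseStream);
}

// Local stand-in for responseStream that records each chunk it receives.
function createCollector() {
  const chunks = [];
  const sink = new Writable({
    write(chunk, encoding, callback) {
      chunks.push(chunk.toString("utf8"));
      callback();
    },
  });
  return { sink, chunks };
}

// In Lambda (not runnable locally):
// exports.handler = awslambda.streamifyResponse(async (event, responseStream) => {
//   responseStream.setContentType("text/csv");
//   await streamRows(responseStream, 100000);
// });
```

Because the rows are generated on demand, memory use stays roughly constant no matter how many rows are streamed.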

End the response stream

When using the `write()` method, you must end the stream before the handler returns. Call `responseStream.end()` to signal that you are not writing any more data to the stream. This is not required if you write to the stream with `pipeline()`, because `pipeline()` ends the destination stream once the source has been fully consumed.
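When you do use `write()`, the sketch below shows one way to do it safely, assuming the data is already available as an array of chunks: wait for the `'drain'` event whenever `write()` returns `false`, then call `end()` and wait for the stream to finish. The helper name `writeAllAndEnd` is hypothetical; `events.once()` and `stream.finished()` are standard Node.js APIs.

```javascript
"use strict";
const { finished } = require("stream");
const { once } = require("events");
const { promisify } = require("util");

const finishedAsync = promisify(finished);

// Writes every chunk with write(), honoring backpressure, then ends the stream.
async function writeAllAndEnd(responseStream, chunks) {
  for (const chunk of chunks) {
    // write() returns false when the internal buffer is full;
    // wait for 'drain' before writing more.
    if (!responseStream.write(chunk)) {
      await once(responseStream, "drain");
    }
  }
  // Signal that no more data will be written.
  responseStream.end();
  // Resolve only after all data has been flushed (reject if the stream errors).
  await finishedAsync(responseStream);
}

// In Lambda (not runnable locally):
// exports.handler = awslambda.streamifyResponse(async (event, responseStream) => {
//   await writeAllAndEnd(responseStream, ["first chunk\n", "second chunk\n"]);
// });
```

This is essentially what `pipeline()` does for you, which is why the `pipeline()` route needs no explicit `end()` call.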

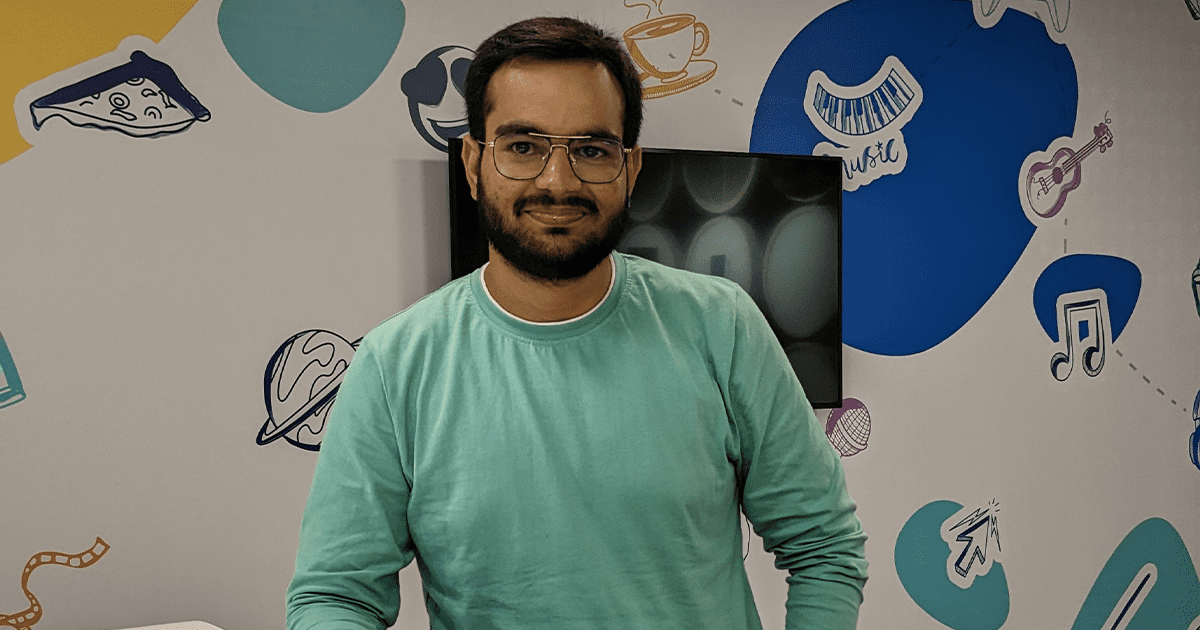

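The following example reads a large object from S3 and returns it to the client as a stream.

Because the function forwards bytes as soon as they arrive from S3, it reduces TTFB, and the response can exceed the 6MB limit that applies to buffered responses.

```javascript
"use strict";

// The AWS SDK for JavaScript v2 (aws-sdk) is bundled only with the Node.js 16.x
// and earlier Lambda runtimes; on newer runtimes, package it with the function
// or use the v3 client (@aws-sdk/client-s3).
const AWS = require("aws-sdk");
const util = require("util");
const stream = require("stream");

// Promise-based pipeline() that also works on Node.js 14.x
// (stream/promises requires Node.js 15 or later)
const pipeline = util.promisify(stream.pipeline);

AWS.config.update({ region: process.env.AWS_REGION });
const s3 = new AWS.S3();

module.exports.handler = awslambda.streamifyResponse(async (event, responseStream, context) => {
  const params = {
    Bucket: process.env.MEDIA_BUCKET,
    Key: "samples/large_media.pdf",
  };

  console.log("Creating an S3 ReadStream");
  const requestStream = s3.getObject(params).createReadStream();

  // HTTP status code and headers sent to the client ahead of the body
  const metadata = {
    statusCode: 200,
    headers: {
      "Content-Type": "application/pdf",
    },
  };

  console.log("Streaming PDF file via Lambda function URL");
  // Wrap the stream so the metadata is sent before the body
  responseStream = awslambda.HttpResponseStream.from(responseStream, metadata);

  // pipeline() handles backpressure and ends responseStream when the S3 stream is done
  await pipeline(requestStream, responseStream);
});
```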

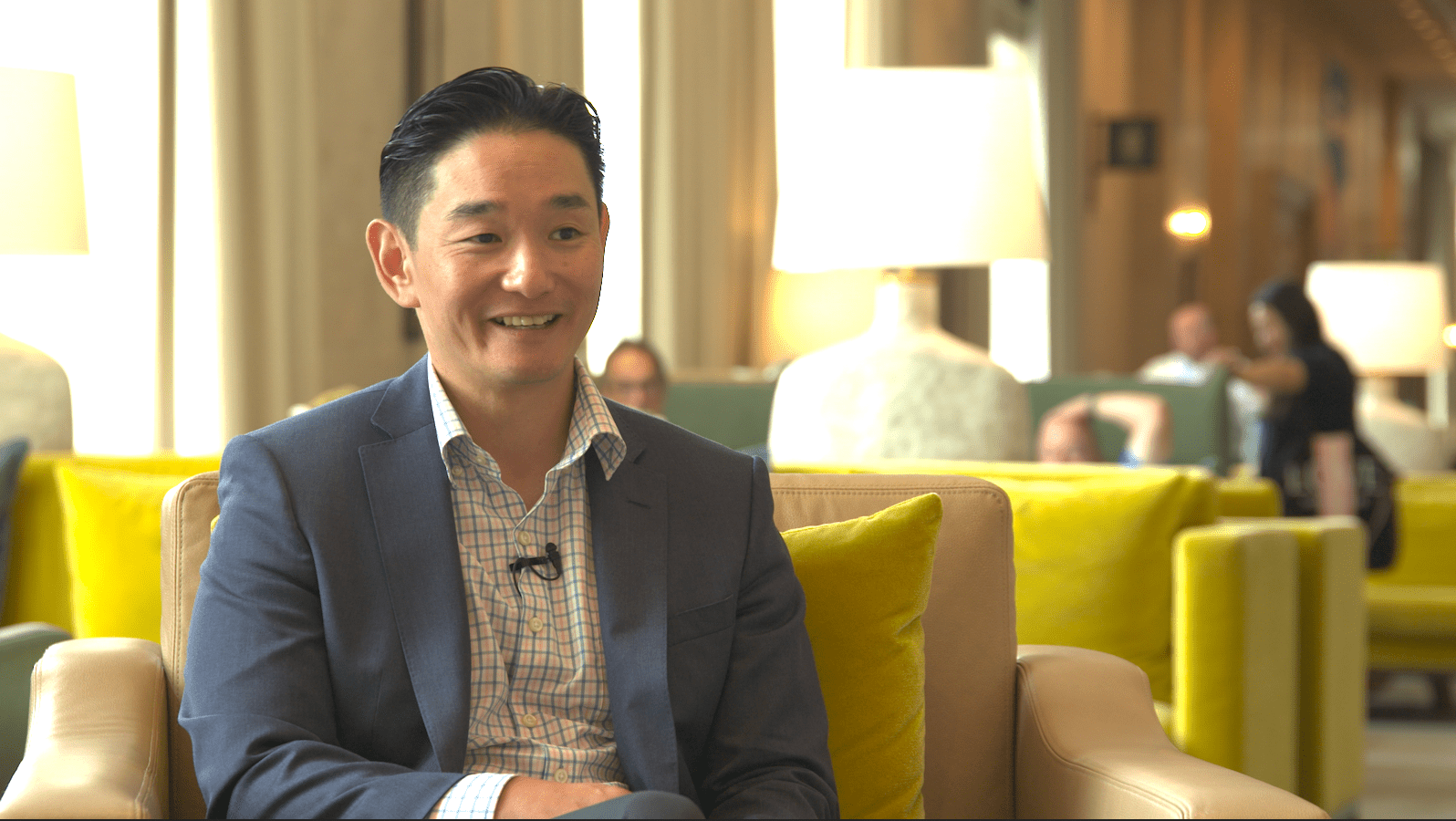

Limitations of Lambda Streaming Responses

- Streamed responses have a default maximum size of 20MB. This is a soft limit, so you can raise it through the Service Quotas console or by opening a support ticket.

- Streamed responses are not supported by API Gateway’s LAMBDA_PROXY integration. You can use HTTP_PROXY integration between API Gateway and the Lambda Function URL, but you will be limited by API Gateway’s 10MB response payload limit. Also, API Gateway doesn’t support chunked transfer encoding, so you lose the benefit of a faster time-to-first-byte.

- ALB’s Lambda integration doesn’t support streamed responses.

- Response streaming currently supports Node.js 14.x and subsequent managed runtimes.
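Because streamed responses are capped by a quota (20MB by default, per the first limitation above), a function that streams data of unpredictable size may want to fail explicitly before reaching it. The following is a minimal sketch, assuming a hypothetical `createByteLimit()` Transform placed in the pipeline ahead of `responseStream`. The 20 × 1024 × 1024 byte budget is an assumption about how the quota is counted, so check the value that applies to your account in the Service Quotas console.

```javascript
"use strict";
const { Transform } = require("stream");

// Assumed budget: 20MB counted as 20 * 1024 * 1024 bytes (adjust to your quota).
const DEFAULT_MAX_BYTES = 20 * 1024 * 1024;

// Hypothetical guard: passes chunks through unchanged and fails the stream,
// without forwarding the chunk, once the running byte total exceeds maxBytes.
function createByteLimit(maxBytes = DEFAULT_MAX_BYTES) {
  let total = 0;
  return new Transform({
    transform(chunk, encoding, callback) {
      total += chunk.length; // chunk is a Buffer in non-object mode
      if (total > maxBytes) {
        callback(new Error(`Response would exceed ${maxBytes} bytes`));
        return;
      }
      callback(null, chunk);
    },
  });
}

// Usage inside a streaming handler (assumes `pipeline` is promisified as in the S3 example):
// await pipeline(requestStream, createByteLimit(), responseStream);
```

When the guard errors, `pipeline()` rejects and destroys every stream in the chain, so the handler can log the failure instead of silently producing a partial response.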

In conclusion, AWS Lambda’s traditional buffered request-response model limits payloads to 6MB and delays TTFB until the full response is ready. Response streaming addresses both problems: by wrapping the handler in the streamifyResponse decorator and writing to the response stream (ideally with pipeline()), a function can send data to clients progressively and return payloads larger than 6MB. Keep its limitations in mind, including the default 20MB maximum response size and the lack of support in API Gateway’s LAMBDA_PROXY integration and ALB’s Lambda integration. Within those limits, Lambda response streaming is an efficient way to handle large data transfers, particularly in web applications.

References

- https://aws.amazon.com/blogs/compute/introducing-aws-lambda-response-streaming/

- https://docs.aws.amazon.com/lambda/latest/dg/configuration-response-streaming.html