Ask ChatGPT for a research paper that doesn’t exist, and it might invent one, complete with author names, a publication year, and a believable abstract.

It sounds absurd, right? An AI confidently fabricates facts.

But here’s the twist: What looks like a mistake in artificial intelligence might actually be a sign of creative cognition, the same spark that drives human imagination.

Welcome to the world of AI Hallucinations, where falsehood meets genius.

What Are AI Hallucinations, Really?

An AI hallucination happens when a model generates false, inconsistent, or unverifiable information that appears plausible.

Think of it like this: When you dream, your brain creates stories out of thin air that are coherent, emotional, and often meaningful. LLMs do something similar: they assemble patterns of words that fit statistically, not factually.

“AI doesn’t know what’s true—it only knows what sounds true.”

In essence, hallucinations are language illusions, born from the model’s attempt to fill in the blanks.

Why Do They Happen?

Let’s decode the root causes from a technical lens:

- Probabilistic Text Generation: LLMs (like GPT, Claude, or Gemini) predict the next most likely token. They don’t validate the truth; they maximize coherence.

- Training Data Gaps: If a topic isn’t covered in the model’s training corpus, it guesses based on nearby patterns.

- Overconfidence Bias: The model is trained to sound fluent, not uncertain. It will confidently produce nonsense rather than admit, “I don’t know.”

- Prompt Ambiguity: Vague or open-ended questions invite creativity and hallucination.

- Lack of Grounding: Without real-time access to verified data (like a database or search engine), models improvise.

So, when GPT invents a “Journal of Quantum Neuro-Sensing,” it’s not lying. It’s predicting what such a journal should sound like.
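To make that concrete, here is a deliberately tiny sketch of next-token prediction. It assumes a toy bigram counter rather than a real LLM, and the corpus, `train_bigrams`, and `next_token` are made-up names for illustration only. Note that “atlantis” never appears in the training data, yet the model still produces an answer.

```python
from collections import Counter, defaultdict

# Toy training corpus (illustrative only). "atlantis" never appears in it.
CORPUS = [
    "the capital of france is paris",
    "the capital of france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
]


def train_bigrams(sentences):
    """Count which token follows each token in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token(counts, prompt):
    """Greedy decoding: return the most frequent follower of the last prompt token."""
    last = prompt.split()[-1]
    followers = counts.get(last)
    if not followers:
        return None  # the toy model has never seen this token
    return followers.most_common(1)[0][0]


model = train_bigrams(CORPUS)
answer = next_token(model, "the capital of atlantis is")
print(answer)
```

Because “paris” follows “is” more often than anything else in the toy corpus, that is what comes out for Atlantis: fluent, confident, and unsupported by any data. Nothing in the procedure checks whether the completed sentence is true.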

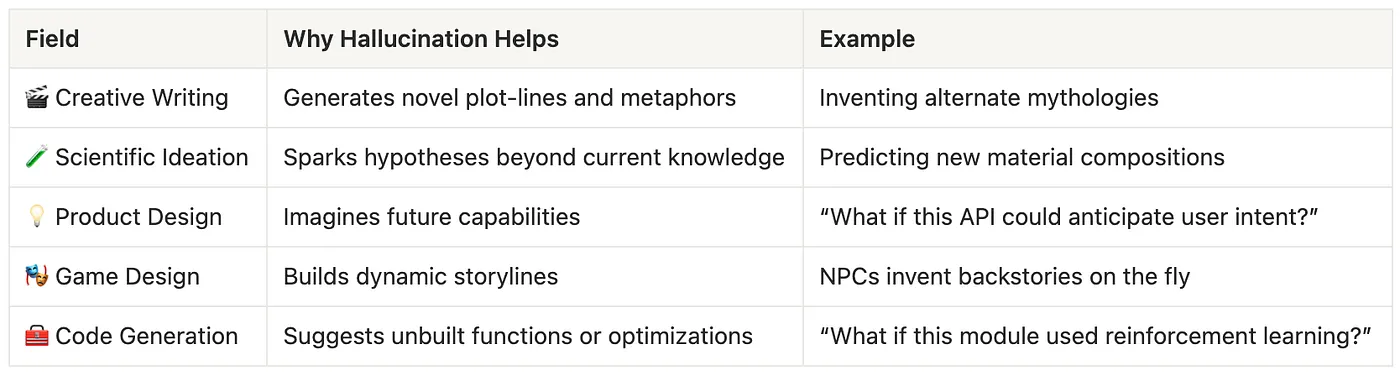

Hallucination as a Feature, Not a Bug

We usually see hallucinations as failures, but what if they’re the AI’s creative imagination at work?

In certain domains, that’s exactly what we want.

Hallucinations are imagination engines. The trick is learning when to let AI dream and when to wake it up.

The Science Behind the Dream

AI hallucination is not random. It is structured imagination driven by probabilities.

“Hallucination is all LLMs do. They are dream machines. We direct their dreams with prompts.” — Andrej Karpathy

Let’s unpack how it happens inside the model:

- Token Prediction: Each next token is chosen from a probability distribution learned during training (e.g., after “The capital of France is,” the token “Paris” has the highest likelihood).

- Temperature Parameter: Low temperature (0–0.3) gives focused, predictable output. High temperature (0.8–1) gives creative, diverse, and often hallucinatory output. Low temperature makes the model more repeatable, not more truthful.

- Attention Mechanism: The Transformer architecture uses attention weights to focus on relevant parts of the context, sometimes overly so, amplifying minor associations.

- Reinforcement Learning from Human Feedback (RLHF): Aligns behavior with human preference, not truth. So if humans reward confidence, the model learns to sound more sure, even when wrong.
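The temperature effect is easy to see in a few lines. The sketch below assumes the common formulation in which logits are divided by the temperature before a softmax. The three candidate tokens and their scores are hypothetical numbers chosen for illustration, not values from any real model.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for the token after "The capital of France is"
logits = {"Paris": 5.0, "Lyon": 2.0, "Atlantis": 0.5}

for temperature in (0.2, 1.0):
    probs = softmax_with_temperature(list(logits.values()), temperature)
    rounded = {token: round(p, 4) for token, p in zip(logits, probs)}
    print(f"temperature={temperature}: {rounded}")
```

At the lower temperature almost all of the probability mass collapses onto the top-scoring token. At the higher one, unlikely tokens such as “Atlantis” keep a small but real chance of being sampled, which is where the surprising output comes from.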

Hands-On: Inducing and Using Hallucination

Let’s play with this concept practically.

Step 1: Install Dependencies

    pip install openai

Step 2: Intentionally Trigger Hallucinations

    from openai import OpenAI

    client = OpenAI(api_key="YOUR_API_KEY")

    prompt = """
    Invent 3 fictional scientific papers that revolutionized renewable energy,
    including their authors, journals, years, and a short abstract.
    Make them sound completely real.
    """

    response = client.chat.completions.create(
        model="gpt-5",
        messages=[{"role": "user", "content": prompt}],
        temperature=1,  # High temperature = more creativity
    )

    print(response.choices[0].message.content)

Result:

You’ll likely get papers like:

“Photonic Lattice Storage: Harnessing Light Waves for Energy Persistence (Dr. Lena Zhao, Nature Energy, 2027)”

Totally fictional. But inspiring.

Step 3: Turn Fiction into Innovation

Now use those hallucinations as seeds for real ideas:

    papers = response.choices[0].message.content  # fictional papers from Step 2

    idea_prompt = f"""
    Based on the three fictional papers below,
    propose one real-world experiment that could test their underlying principles.

    {papers}
    """

Sent back to the model, this prompt transforms “fake research” into prototype-worthy hypotheses. You’ve just built a synthetic imagination assistant.

Step 4: Controlling Hallucination (RAG Technique)

To keep imagination grounded in truth, we can combine retrieval with generation (RAG):

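    # Import paths follow recent LangChain releases (langchain-community,
    # langchain-openai); they differ between versions, so check your install.
    from langchain.chains import RetrievalQA
    from langchain_community.vectorstores import FAISS
    from langchain_openai import ChatOpenAI, OpenAIEmbeddings

    # Load a FAISS index previously saved under "energy_papers".
    retriever = FAISS.load_local(
        "energy_papers",
        OpenAIEmbeddings(),
        allow_dangerous_deserialization=True,  # only for index files you created yourself
    ).as_retriever()

    qa = RetrievalQA.from_chain_type(
        llm=ChatOpenAI(model="gpt-5"),
        retriever=retriever,
    )

    result = qa.invoke({"query": "Suggest a new renewable energy method based on real research."})
    print(result["result"])

Now your AI dreams within tighter factual boundaries, balancing creativity against truth. Retrieval narrows the room for invention, but it does not remove it.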
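If you want to see the retrieval idea without any external services, here is a minimal, dependency-free sketch. It assumes a crude word-overlap score in place of real embeddings, and the three snippets in `DOCUMENTS` are placeholder text standing in for a real paper index. `retrieve` and `build_grounded_prompt` are hypothetical helper names, not part of any library.

```python
import re
from collections import Counter

# Placeholder snippets standing in for a real document index.
DOCUMENTS = [
    "Photovoltaic cells convert sunlight directly into electricity.",
    "Wind turbines convert the kinetic energy of moving air into electricity.",
    "Pumped hydro storage moves water uphill so the energy can be recovered later.",
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def overlap_score(query, document):
    """Count the words shared by the query and the document."""
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query, documents, k=2):
    """Return the k documents with the highest word overlap."""
    ranked = sorted(documents, key=lambda d: overlap_score(query, d), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question, documents, k=2):
    """Place the retrieved snippets in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say you don't know.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


question = "How do wind turbines produce electricity?"
print(build_grounded_prompt(question, DOCUMENTS))
```

The resulting string is what you would send to the model instead of the bare question. The explicit instruction to admit ignorance is a design choice: it gives the model a sanctioned alternative to improvising.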

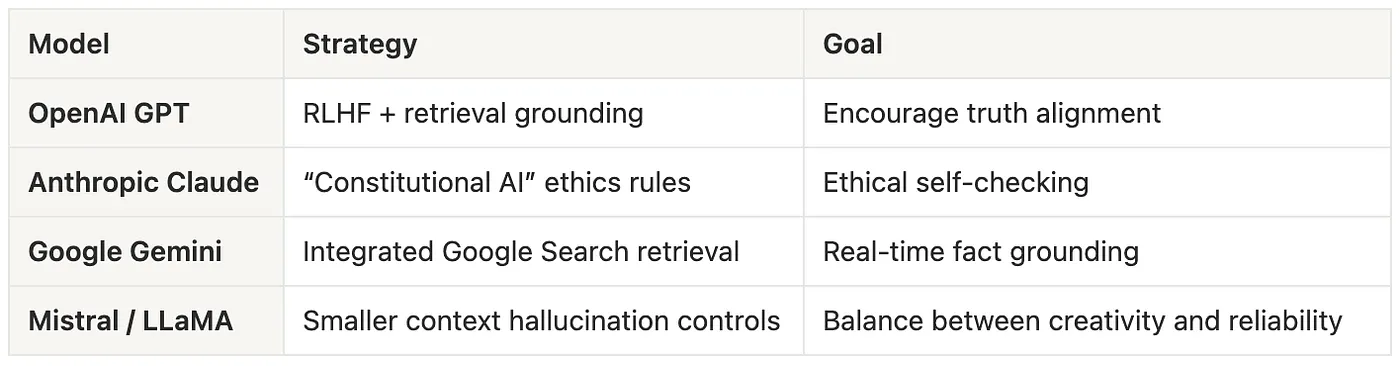

The Industry View: How Big Models Handle Hallucination

Despite progress, hallucination can’t (and shouldn’t) be fully eliminated. It’s the creative bias of intelligence, both artificial and biological.

When Hallucination Becomes Dangerous

Of course, not all imagination is welcome. Hallucinations can cause catastrophic failures in:

- Medical advice systems

- Legal document drafting

- Financial forecasting

- Autonomous driving models

To mitigate these, researchers employ:

- Fact-verification layers

- Confidence calibration

- Source citation requirements

- Human-in-the-loop validation
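As a rough illustration of how a citation requirement and a human-in-the-loop step might fit together, consider the sketch below. Everything in it is an assumption made for the example: the `Draft` structure, the `review_reasons` helper, the 0.8 threshold, and the idea that a calibrated confidence score is available at all.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """A model answer plus the metadata a review gate would need (hypothetical)."""
    text: str
    citations: list = field(default_factory=list)
    confidence: float = 0.0  # assumed to come from some calibrated scorer


def review_reasons(draft, trusted_sources, min_confidence=0.8):
    """Return the reasons a draft needs human review; an empty list means none."""
    reasons = []
    if not draft.citations:
        reasons.append("no sources cited")
    for source in draft.citations:
        if source not in trusted_sources:
            reasons.append(f"unverified source: {source}")
    if draft.confidence < min_confidence:
        reasons.append("low confidence")
    return reasons


TRUSTED = {"Nature Energy"}  # stand-in for a real source registry
draft = Draft(
    text="A new sensing method was reported last year.",
    citations=["Journal of Quantum Neuro-Sensing"],
    confidence=0.95,
)
reasons = review_reasons(draft, TRUSTED)
print("send to human reviewer" if reasons else "auto-approve", reasons)
```

The draft sounds confident, but its only citation is not in the trusted set, so it is routed to a person. High confidence alone never approves an answer here.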

The frontier of “safe creativity” is about allowing AI to dream responsibly.

The Future: Synthetic Creativity

Imagine pairing a hallucinating LLM with a verifier model that checks every claim in real time. Together, they become The Dreamer and The Critic, a system that creates ideas and validates them simultaneously.

This hybrid approach could power:

- Automated R&D ideation tools

- Generative scientific simulations

- AI-led hypothesis engines

- Synthetic content verification frameworks

We’re not far from AI that imagines a new technology and runs a simulation to test its feasibility instantly.
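The Dreamer and Critic pairing can be sketched as a simple generate-then-verify loop. This is a toy under stated assumptions: both roles are stub functions rather than real models, the loop runs sequentially rather than in real time, and `dream_and_critique`, `stub_dreamer`, `stub_critic`, and `KNOWN_FACTS` are hypothetical names.

```python
def dream_and_critique(dreamer, critic):
    """Run every claim from the dreamer past the critic and sort the verdicts."""
    verdicts = {"accepted": [], "rejected": []}
    for claim in dreamer():
        key = "accepted" if critic(claim) else "rejected"
        verdicts[key].append(claim)
    return verdicts


# Stand-in for a verified knowledge source.
KNOWN_FACTS = {"Solar panels convert sunlight into electricity."}


def stub_dreamer():
    """Stub for a high-temperature model: returns a mix of claims."""
    return [
        "Solar panels convert sunlight into electricity.",
        "Photonic lattices store light energy indefinitely.",
    ]


def stub_critic(claim):
    """Stub for a verifier: accepts only claims found in the known-facts set."""
    return claim in KNOWN_FACTS


verdicts = dream_and_critique(stub_dreamer, stub_critic)
print(verdicts)
```

In a real system the rejected list is the interesting part. Those are the unverified ideas that might be worth testing, as opposed to the ones already known to be true.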

The Human Parallel

Human cognition works much the same way. We “hallucinate” possibilities before testing them. Every invention, novel, or theory began as a mental simulation, not a fact.

AI hallucinations are the machine version of this process.

“Einstein imagined riding on a beam of light—that was a hallucination. It also changed physics forever.”

The Final Thought

Hallucinations aren’t glitches in the matrix. They’re glimpses of what happens when machines start to dream.

The real challenge for AI researchers isn’t to eliminate hallucination but to guide it: to teach machines when to imagine and when to reason.

Because when you think about it, creativity itself is a beautiful hallucination.

Pro Tip: Try This

Prompt:

“Invent a fictional scientific breakthrough that sounds real. Then explain how it could actually work in physics.”

You’ll see why hallucinations are the sandbox of AI creativity.